Jensen Huang, CEO of Nvidia, offers insights into the complexities surrounding Artificial General Intelligence (AGI) and addresses the pressing issue of AI hallucinations. He emphasizes defining AGI by concrete benchmarks and advocates rigorous research to mitigate misinformation. Through strategies like “retrieval-augmented generation,” Huang aims to keep AI-generated responses factually accurate. As discussions on AGI continue, his perspectives underscore the importance of ethical AI development and responsible decision-making.

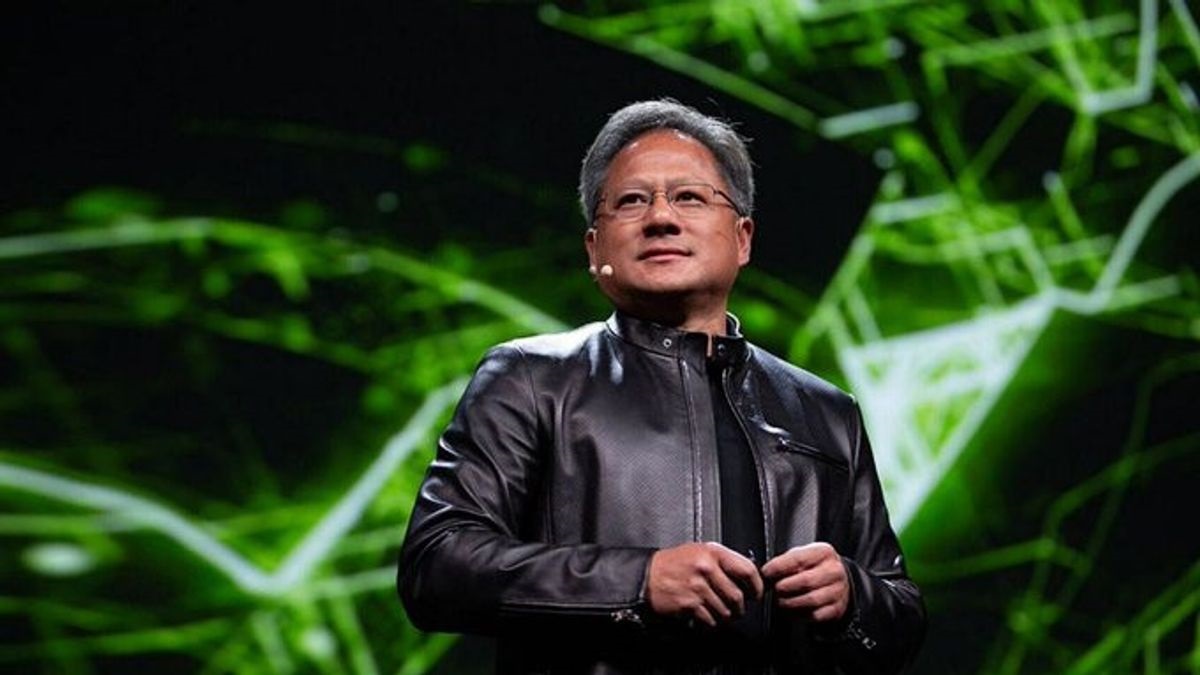

At Nvidia’s GTC developer conference, CEO Jensen Huang discussed Artificial General Intelligence (AGI) and the challenges posed by AI hallucinations. Amid concerns about AGI’s unpredictable nature and ethical implications, he kept the discussion pragmatic: he examined how AGI should be defined and proposed a way to reduce hallucinations. Throughout, Huang stressed that AI progress should align with human values and societal well-being.

Addressing the Quest for Artificial General Intelligence

The Concept of Artificial General Intelligence

Artificial General Intelligence (AGI), often called “strong AI” or “human-level AI,” would mark a pivotal advance in artificial intelligence. Unlike narrow AI, which is tailored to specific tasks, AGI would be able to perform a wide range of cognitive tasks at or above human level.

Jensen Huang’s Remarks at Nvidia’s GTC Conference

At Nvidia’s annual GTC developer conference, CEO Jensen Huang was asked repeatedly about AGI. He appeared weary of the subject, which he attributed to frequent misquotation and sensationalist interpretation by the press.

The Existential Concerns Surrounding AGI

The prevalence of questions about AGI reflects a broader societal unease over the implications of its arrival. AGI raises existential questions about humanity’s position and control in a future where machines may surpass human capabilities across many domains. A central concern is the unpredictability of AGI’s decision-making, which may not align with human values or priorities and could lead to unforeseeable consequences.

Challenges in Predicting AGI’s Arrival

Predicting when AGI will emerge is a formidable challenge, Huang explained, because the answer depends on how AGI is defined. He drew parallels to familiar cases such as knowing when a new year begins or when one has reached a destination: in both, the finish line is agreed on in advance. Huang emphasized that AGI needs similarly clear criteria before anyone can say whether it has been attained.

Towards Defining AGI and Predicting Its Emergence

Huang’s Perspective on AGI Definition

Huang proposed defining AGI in terms of specific benchmarks or tests. He conjectured that if AGI is delineated by criteria such as excelling in standardized tests or surpassing human performance by a certain margin, achieving it could be feasible within five years.

Addressing the Phenomenon of AI Hallucinations

During the conference’s Q&A session, Huang fielded a question about AI hallucinations, that is, instances where an AI generates plausible yet factually incorrect responses. Expressing evident frustration, Huang advocated a solution rooted in rigorous research and validation.

Proposed Solution: Retrieval-Augmented Generation

Huang advocated a method termed “retrieval-augmented generation” to mitigate AI hallucinations. In this approach, the model first retrieves relevant material from credible sources and then bases its answer on what it found, rather than relying on its training data alone. In Huang’s framing, the AI should do its research and judge which sources are factually reliable before generating a response.
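The retrieve-then-generate flow can be illustrated with a minimal Python sketch. It is not Nvidia’s implementation or anything Huang described in detail: the `DOCUMENTS` list, the word-overlap scoring, and the `retrieve` and `build_prompt` helpers are all hypothetical, and the final step of sending the prompt to a language model is left out.

```python
import re
from collections import Counter

# Hypothetical mini-corpus standing in for a store of trusted documents.
DOCUMENTS = [
    "GTC is Nvidia's annual developer conference.",
    "Retrieval-augmented generation grounds answers in retrieved documents.",
    "Narrow AI is built for specific tasks.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query, doc):
    """Count the tokens shared by the query and the document (toy relevance score)."""
    q = Counter(tokenize(query))
    d = Counter(tokenize(doc))
    return sum(min(q[t], d[t]) for t in q)


def retrieve(query, documents, k=2):
    """Return up to k documents with the highest non-zero overlap score."""
    ranked = sorted(documents, key=lambda doc: score(query, doc), reverse=True)
    return [doc for doc in ranked[:k] if score(query, doc) > 0]


def build_prompt(query, passages):
    """Assemble a prompt that tells the model to answer only from the sources."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say you do not know.\n"
        f"Sources:\n{context}\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    question = "What is GTC?"
    print(build_prompt(question, retrieve(question, DOCUMENTS, k=1)))
```

A production system would typically replace the word-overlap score with a search index or embedding similarity, but the shape stays the same: look up sources first, then generate from them.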

Ensuring Accuracy in Mission-Critical Responses

For critical inquiries, such as health-related advice, Huang recommended cross-referencing multiple reputable sources to confirm accuracy. Acknowledging the limitations of AI, he suggested that systems be able to admit uncertainty when the available information is ambiguous or incomplete.
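One way to picture this cross-checking is the short Python sketch below. It assumes that each source has already been reduced to a short answer string, which is a considerable simplification; the `cross_check` and `respond` functions and the `min_agreement` threshold are illustrative choices, not something Huang specified.

```python
from collections import Counter


def cross_check(answers, min_agreement=2):
    """Return the answer most sources agree on, or None if agreement is too weak.

    `answers` holds one short answer string per consulted source (hypothetical input).
    """
    normalized = [a.strip().lower() for a in answers if a and a.strip()]
    if not normalized:
        return None
    counts = Counter(normalized).most_common(2)
    top_answer, top_count = counts[0]
    # A tie between the two most common answers counts as disagreement.
    tied = len(counts) > 1 and counts[1][1] == top_count
    if top_count < min_agreement or tied:
        return None
    return top_answer


def respond(answers, min_agreement=2):
    """Give the verified answer, or admit uncertainty when sources disagree."""
    verified = cross_check(answers, min_agreement)
    if verified is None:
        return "I don't know: the sources I checked do not agree."
    return verified


if __name__ == "__main__":
    print(respond(["Yes", "yes ", "no"]))
    print(respond(["yes", "no"]))
```

Raising `min_agreement` makes the system more cautious: it answers less often but requires more sources to agree before it does.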

Jensen Huang’s remarks on AGI and AI hallucinations highlight key considerations for the future of artificial intelligence. By advocating clear benchmarks for defining AGI and research-driven approaches to combating misinformation, Huang outlines a framework for responsible AI development. As the quest for AGI continues, his emphasis on transparency, accountability, and alignment with human values points to a way for AI advances to benefit society.